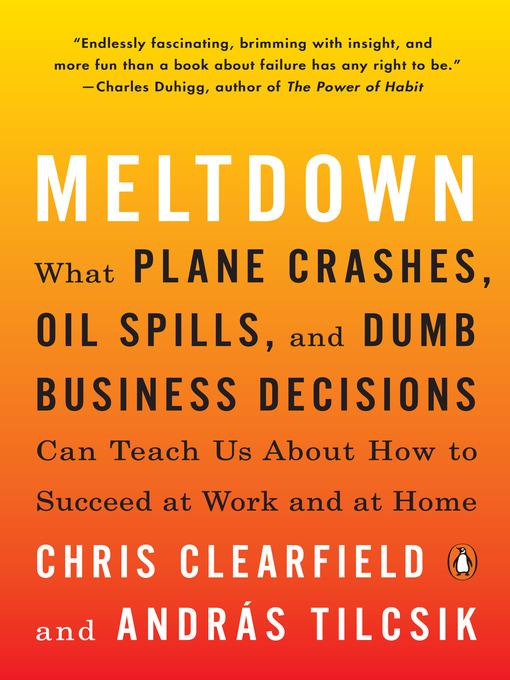

Meltdown

What Plane Crashes, Oil Spills, and Dumb Business Decisions Can Teach Us About How to Succeed at Work and at Home

Reviews

January 15, 2018

Clearfield, a former derivatives trader, and Tilcsik, a management academic, share cautionary tales of disasters that result from small vulnerabilities in large systems. Their analysis is enlightening, but they flounder in translating their insights into usable takeaways. Clearfield and Tilcsik’s approach centers on sociologist Charles Perrow’s 1984 theory that as a system’s complexity and “tight coupling” (a lack of slack between its different parts) increase, so does the “danger zone.” Clear, well-paced storytelling about diverse events, including a fatal D.C. Metro train accident, Three Mile Island, the collapse of Enron, Volkswagen’s emissions cheating, the Flint water crisis, the 2017 Oscars mix-up, and the commandeering of a Starbucks hashtag by the company’s critics, will keep readers interested, whether or not they are invested in the organizational lessons. The solutions offered, however, tend toward the less-than-revolutionary: keeping decision-making parameters clear, increasing workforce diversity, and building organizational cultures in which dissent is genuinely encouraged. Clearfield and Tilcsik’s most important warning concerns the “normalization of deviance,” in which people come to redefine commonly encountered risks as acceptable, as can happen when automated warnings in hospitals constantly cry wolf. This manual articulates the ubiquitous nature of system failure well, but its approaches to “reducing complexity and adding slack” are too vague to be practically implemented.

January 15, 2018

If it can be built, it can fall apart: a cautionary study in how complex systems can easily go awry. As systems become more complex, guided by artificial intelligence and algorithms as well as human experience, they become more likely to fail. The result, write one-time derivatives trader and commercial pilot Clearfield and Tilcsik (Univ. of Toronto Rotman School of Management), is that we are now "in the golden age of meltdowns," confronted on all sides by things that fall apart, whether the financial fortunes of entrepreneurs, the release valves of dam plumbing, or the ailerons of jetliners. The authors examine numerous case studies of how miscommunications and failed checklists figure into disaster, as with one notorious air crash in which improperly handled oxygen canisters produced a fatal in-flight fire: "The investigation," they write, "revealed a morass of mistakes, coincidences, and everyday confusions." Against this, Clearfield and Tilcsik helpfully propose ways in which the likelihood of disaster or unintended consequences can be lessened: cross-training, for instance, so that members of a team know something of one another's jobs and responsibilities, and iterative processes of checking and cross-checking. At times, the authors venture into matters of controversy, as when they observe that mandatory diversity training yields more rather than less racist behavior and suggest that "targeted recruitment" of underrepresented groups sends a more positive message: "Help us find a greater variety of promising employees!"
Though the underlying argument isn't new (Clearfield and Tilcsik draw heavily on the work of social scientist Charles Perrow, particularly his 1984 book Normal Accidents), their body of examples is relatively fresh, if sometimes not so well remembered today: e.g., the journalistic crimes of Jayson Blair, made possible by a complex accounting system that just begged to be gamed.

Programmers, social engineers, and management consultants are among the many audiences for this useful, thought-provoking book.

COPYRIGHT (2018) Kirkus Reviews, ALL RIGHTS RESERVED.